Hey friends, I’m Akash!

Software Synthesis analyses the intersection of AI, software and GTM strategy. Join thousands of founders, operators and investors from leading companies for weekly insights.

You can always reach me at akash@earlybird.com to exchange notes!

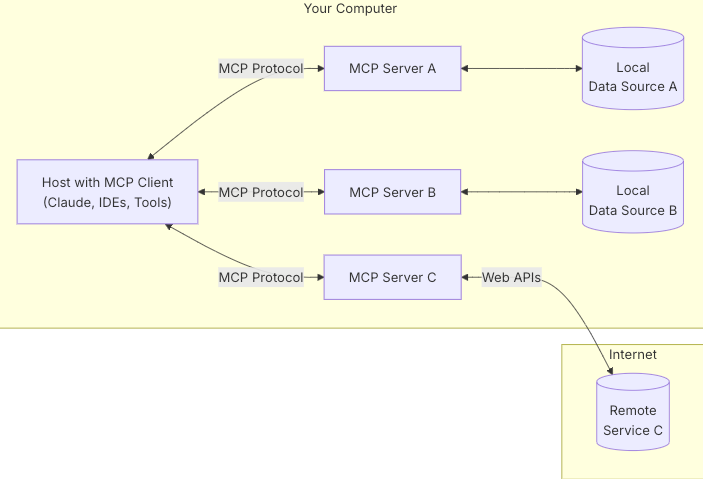

As AI infrastructure startups are building for agent-computer interfaces, Anthropic’s Model Context Protocol (MCP) is gaining traction as a solution to the problem of memory.

> The agentic pattern often deals with information that exceeds a model’s context limit. A memory system that supplements the model’s context in handling information can significantly enhance an agent’s capabilities.
>
> Chip Huyen

> Large language models possess vast knowledge, but they're trapped in an eternal present moment. While they can draw from the collected wisdom of the internet, they can't form new memories or learn from experience: beyond their weights, they are completely stateless. Every interaction starts anew, bound by the static knowledge captured in their weights. As a result, most “agents” are more akin to LLM-based workflows, rather than agents in the traditional sense.
>
> Charles Packer

Let’s walk through an example of a Sales AI tool wanting to build an integration into Salesforce.

- MCP Host: Your Sales AI tool
  - This is the main application trying to access Salesforce data
- MCP Client: The protocol client embedded in your Sales AI tool
  - Handles the actual connections to MCP servers, maintaining a 1:1 connection with each one
  - Part of your Sales AI tool's infrastructure
- MCP Server: The Salesforce MCP server
  - Could be official (if Salesforce built it)
  - Could be community-built
  - Could be custom-built by your team
  - Translates MCP requests into Salesforce API calls
- Local Data Sources: Could be:
  - Local sales spreadsheets
  - Cached Salesforce data
  - Local CRM databases
- Remote Services: Salesforce's API
  - The actual cloud service being accessed
  - Other examples might include:
    - HubSpot API
    - LinkedIn Sales Navigator API
    - Email marketing services
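To make the host/client/server split concrete, here is a minimal sketch of the JSON-RPC 2.0 messages the MCP client inside the Sales AI tool might send to a Salesforce MCP server: a handshake, tool discovery, then a tool call. The method names (`initialize`, `notifications/initialized`, `tools/list`, `tools/call`) and the `2024-11-05` protocol version come from the MCP specification, but should be checked against its current revision. The tool name `search_opportunities`, its arguments and the client name are hypothetical, and a real client would send these over a transport (stdio or HTTP) via an MCP SDK rather than print them.

```python
import json
from typing import Optional


def request(req_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request; the server echoes `id` in its response."""
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 notification: no `id`, so no response is expected."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def salesforce_session() -> list:
    """Messages the Sales AI tool's MCP client might send, in order."""
    return [
        # 1. Handshake: negotiate protocol version and capabilities.
        request(1, "initialize", {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": "sales-ai-tool", "version": "0.1.0"},  # hypothetical
        }),
        notification("notifications/initialized"),
        # 2. Discovery: ask the server which tools it exposes.
        request(2, "tools/list"),
        # 3. Invocation: the server translates this into Salesforce API calls.
        request(3, "tools/call", {
            "name": "search_opportunities",  # hypothetical tool name
            "arguments": {"stage": "Negotiation", "limit": 5},
        }),
    ]


if __name__ == "__main__":
    for msg in salesforce_session():
        print(json.dumps(msg))
```

The host never calls the Salesforce REST API directly. It only speaks MCP, and the server owns the translation into Salesforce-specific calls.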

Here is how building custom integrations for every application compares with and without MCP:

- Development Effort:
  - Traditional: Write custom code for each API integration
  - MCP: Connect to pre-built MCP servers that handle the integration
- Context Management:
  - Traditional: You maintain context between API calls yourself; function calls are isolated transactions
  - MCP: The protocol maintains session context automatically
    - Context persists across multiple interactions
    - Servers can adapt available tools based on conversation flow
- Error Handling:
  - Traditional: Handle each API's unique error patterns
  - MCP: Standardised error handling across all services
- AI-Specific Features:
  - Traditional: Build your own prompting and tool discovery
  - MCP: Built-in support for:
    - Dynamic tool discovery
    - Prompt templates
    - Progressive disclosure of capabilities
  - MCP servers can expose capabilities dynamically, so tools can be discovered and composed at runtime
- Interaction Pattern:
  - Traditional: Function calls are just request-response
  - MCP: Supports streaming and progressive updates, which is better for long-running or complex operations
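The discovery, error-handling and streaming points in the comparison above can be illustrated with a toy, in-process server. This is a sketch under stated assumptions, not the official MCP SDK: the class `ToyMcpServer`, the helper `progress_updates` and the `count_open_deals` tool are hypothetical. The message shapes (`tools/list`, `tools/call`, `notifications/tools/list_changed`, `notifications/progress`) and the JSON-RPC error codes (-32601 for an unknown method, -32602 for invalid params such as an unknown tool) are taken from the MCP and JSON-RPC specifications and are worth re-checking against their current versions.

```python
from typing import Any, Callable, Dict, List


def _error(req_id: Any, code: int, message: str) -> dict:
    """JSON-RPC 2.0 error object: the same shape whatever service sits behind it."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


class ToyMcpServer:
    """In-process stand-in for an MCP server; not the official MCP SDK."""

    def __init__(self) -> None:
        self._tools: Dict[str, dict] = {}
        self.outbox: List[dict] = []  # notifications the server pushes to the client

    def add_tool(self, name: str, description: str, input_schema: dict,
                 fn: Callable[..., Any]) -> None:
        """Register a tool at runtime and tell the client the tool list changed."""
        self._tools[name] = {"description": description,
                             "inputSchema": input_schema, "fn": fn}
        self.outbox.append({"jsonrpc": "2.0",
                            "method": "notifications/tools/list_changed"})

    def handle(self, msg: dict) -> dict:
        req_id, method = msg.get("id"), msg.get("method")
        params = msg.get("params") or {}
        if method == "tools/list":
            # Discovery: clients learn tool names and argument schemas at runtime.
            tools = [{"name": name, "description": t["description"],
                      "inputSchema": t["inputSchema"]}
                     for name, t in self._tools.items()]
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
        if method == "tools/call":
            tool = self._tools.get(params.get("name"))
            if tool is None:
                return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
            text = str(tool["fn"](**params.get("arguments", {})))
            return {"jsonrpc": "2.0", "id": req_id,
                    "result": {"content": [{"type": "text", "text": text}],
                               "isError": False}}
        return _error(req_id, -32601, f"Method not found: {method}")


def progress_updates(token: str, total: int) -> List[dict]:
    """Progress notifications a long-running tool could emit before its result."""
    return [{"jsonrpc": "2.0", "method": "notifications/progress",
             "params": {"progressToken": token, "progress": done, "total": total}}
            for done in range(1, total + 1)]


if __name__ == "__main__":
    server = ToyMcpServer()
    server.add_tool("count_open_deals", "Count open deals for an account",  # hypothetical
                    {"type": "object", "properties": {"account": {"type": "string"}}},
                    lambda account: f"3 open deals for {account}")
    print(server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
    print(server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                         "params": {"name": "count_open_deals",
                                    "arguments": {"account": "Acme"}}}))
    print(server.handle({"jsonrpc": "2.0", "id": 3, "method": "billing/charge"}))
```

The client depends only on `tools/list` and the shared error shape, so a tool added at runtime needs no new client code. A real client would re-run `tools/list` after receiving `notifications/tools/list_changed`.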

Companies like Stripe, Neo4j and Cloudflare already offer production-ready MCP servers; you can browse the full repository of servers built by the community and by service providers.

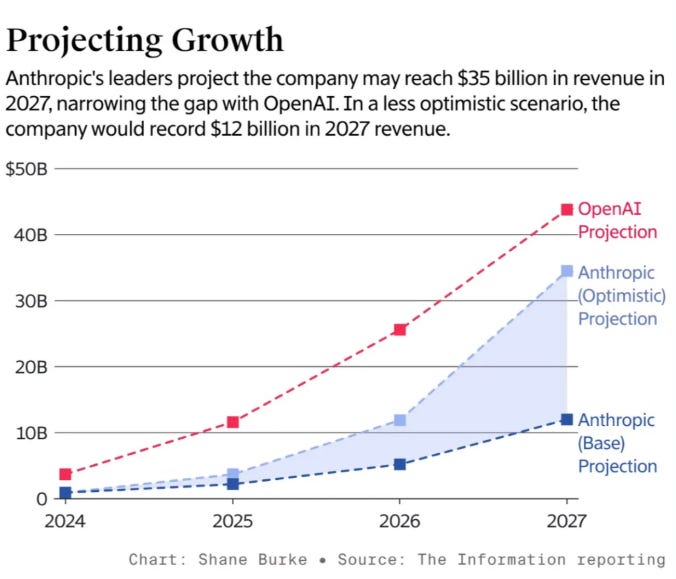

As Anthropic vies to narrow the gap with OpenAI, MCP’s growth could be key.

The early traction presents some interesting scenarios.

For data sources (often incumbent systems of record), the more AI apps use MCP, the stronger the incentive to build official MCP servers, much as companies build official APIs once there's enough demand.

For AI companies, more MCP servers = more capabilities without building custom integrations. Companies can mix and match servers (e.g., combine Salesforce + Gmail + Calendar servers), maintain state and deliver truly agentic experiences across an array of applications.
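Here is a sketch of the mix-and-match idea: an agent host mounts Salesforce, Calendar and Gmail servers side by side and chains their tools. Prefixing each tool name with its server name is one common way to avoid collisions. It is assumed here, not mandated by MCP. `ToolRouter`, `build_demo_router` and every tool name are hypothetical, and plain Python callables stand in for real MCP server connections.

```python
from typing import Any, Callable, Dict, List


class ToolRouter:
    """Merges tools from several MCP servers into one namespaced catalogue."""

    def __init__(self) -> None:
        self._routes: Dict[str, Callable[..., Any]] = {}

    def mount(self, server: str, tools: Dict[str, Callable[..., Any]]) -> None:
        # Prefix each tool with its server name so identically named tools
        # from different servers cannot collide.
        for name, fn in tools.items():
            qualified = f"{server}.{name}"
            if qualified in self._routes:
                raise ValueError(f"duplicate tool: {qualified}")
            self._routes[qualified] = fn

    def catalogue(self) -> List[str]:
        """Every tool the agent can see, across all mounted servers."""
        return sorted(self._routes)

    def call(self, qualified: str, **arguments: Any) -> Any:
        if qualified not in self._routes:
            raise KeyError(f"no such tool: {qualified}")
        return self._routes[qualified](**arguments)


def build_demo_router() -> ToolRouter:
    """Stand-ins for three separate servers; every tool here is hypothetical."""
    router = ToolRouter()
    router.mount("salesforce", {"top_account": lambda: "Acme"})
    router.mount("calendar", {"next_free_slot": lambda: "Tue 10:00"})
    router.mount("gmail", {"draft_email": lambda to, subject: f"Draft to {to}: {subject}"})
    return router


if __name__ == "__main__":
    router = build_demo_router()
    account = router.call("salesforce.top_account")
    slot = router.call("calendar.next_free_slot")
    # The agent carries results from one server into the next call.
    print(router.call("gmail.draft_email", to=account, subject=f"Meet {slot}?"))
```

Run as a script, this prints `Draft to Acme: Meet Tue 10:00?`. The state that links the three calls lives in the agent, while each server only needs to expose its own tools.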

An early pattern seems to be:

1. Community builds initial servers
2. As MCP gains adoption, companies build official versions
3. AI tools can choose between official, community, or custom implementations

There might be a parallel between the evolution of container registries (Docker Hub/ECR/GitLab) and how MCP's ecosystem is developing.

- Vendor-Certified (like ECR):
  - ECR: AWS's managed, enterprise-focused registry
  - MCP Equivalent: Anthropic's official registry (83 servers)
  - Characteristics: High compliance, enterprise features, strong security
- Community (like Docker Hub):
  - Docker Hub: Public, widely-used container registry
  - MCP Equivalent: GitHub/Hugging Face MCP servers
  - Characteristics: Low barrier to entry, experimental use cases, community-driven
- Enterprise Private (like GitLab):
  - GitLab: Self-hosted, integrated with development workflows
  - MCP Equivalent: Private company MCP registries
  - Characteristics: Custom compliance, internal tools

There are several other second-order consequences.

By establishing MCP as the de facto standard, Anthropic could become the "Intel Inside" of enterprise AI. Even if MCP remains open-source, Anthropic gains influence through:

- First-mover advantage in protocol governance
- Enterprise support contracts for mission-critical deployments

With MCP, the engineering cost of integrations will keep falling precipitously. This cost compression will further accentuate the deflationary forces that are driving more startup creation.

There will be security and governance challenges: attackers could poison MCP registries with malicious servers masquerading as legitimate services.
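One possible mitigation for registry poisoning is for a private registry to pin each approved server to a cryptographic digest. It would then refuse to launch anything whose name or bytes don't match. This is sketched here as an assumption, not something MCP itself specifies. `is_trusted`, the allowlist format and the package bytes are hypothetical. Only `hashlib` and `hmac` from Python's standard library are real APIs.

```python
import hashlib
import hmac
from typing import Dict


def sha256_hex(artifact: bytes) -> str:
    """Hex-encoded SHA-256 digest of a server package."""
    return hashlib.sha256(artifact).hexdigest()


def is_trusted(name: str, artifact: bytes, allowlist: Dict[str, str]) -> bool:
    """Allow a server only if its name is vetted and its bytes match the pinned digest."""
    pinned = allowlist.get(name)
    if pinned is None:
        # Unknown or look-alike names (e.g. "salesf0rce-mcp") are rejected outright.
        return False
    # Constant-time comparison of the hex digests.
    return hmac.compare_digest(sha256_hex(artifact), pinned)


if __name__ == "__main__":
    vetted = b"salesforce-mcp v1.2.0 build"  # stand-in for the reviewed package bytes
    allowlist = {"salesforce-mcp": sha256_hex(vetted)}  # hypothetical internal allowlist
    print(is_trusted("salesforce-mcp", vetted, allowlist))         # True
    print(is_trusted("salesforce-mcp", vetted + b"!", allowlist))  # False: tampered bytes
    print(is_trusted("salesf0rce-mcp", vetted, allowlist))         # False: look-alike name
```

Pinning catches both typosquatted names and tampered packages. It does not help if a malicious server was approved in the first place, so vetting before pinning still matters.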

MCP will also enable enterprises to operationalise AI agents faster. Legacy and new applications can be exposed as MCP servers; if the service provider hasn’t built one already, the community may well do so soon enough.