Backcasting From Known AI Scaling Laws

Realising plausible futures enabled by the known quantities of AI

Hey friends! I’m Akash, partnering with founders at the earliest stages at Earlybird.

Software Synthesis is where I connect the dots on software and company building strategy.

You can reach me at akash@earlybird.com. If you liked this piece, feel free to share this blog with a friend who might enjoy it too!


Known Knowns, Known Unknowns, Unknown Unknowns

With respect to current AI scaling laws, the realm of plausible futures for entrepreneurs to realise is sufficiently compelling, without taking into account any unknown unknowns.

For example, we know scaling laws are holding for now, but we don’t know if they’ll continue indefinitely. We also don’t know where another breakthrough in model capabilities might come from. The most likely sources we know of are improvements in algorithms, scaling data in novel ways, and the continued construction of data centres to scale compute.

We also know we’re going to hit a data wall in around four years (at least for high quality public data), but we don’t know what new scaling techniques will be devised in the interim to compensate for a scarcity of data.

The unknown unknowns are, of course... unknowable. An example might be sudden advancements in capabilities that deviate from our current mapping of scaling laws. Other examples are the ways that hard technologies will interact - how will cheap, unlimited fusion energy remove bottlenecks on the energy consumption of the frontier class of models five years from now?

What level of ‘world knowledge’ will multimodal foundation models possess once they’ve been fed data across modalities? Over the 70 years of AI’s existence, we’ve constantly moved the goalposts for what constitutes true AI - will we see AI pass the ARC-AGI test of ‘a system that can efficiently acquire new skills outside of its training data’ in the not-so-distant future? Such intelligence, widely acknowledged as true general intelligence, will have profound second order effects that are difficult to grasp (and we can’t predict with much confidence if or when it’ll be achieved).

Though there are many unknown unknowns worth speculating about, the point I’m making is that by purely studying the known knowns and known unknowns, entrepreneurs can already create value in this paradigm shift.

Backcasting from the future

Mike Maples Jr. of Floodgate came up with the framework of backcasting for building breakthrough companies. The principal tenets are:

Identify inflections

Backcast from possible futures

Gather breakthrough insights

This framing fits the scaling laws and their known quantities well.

Inflections

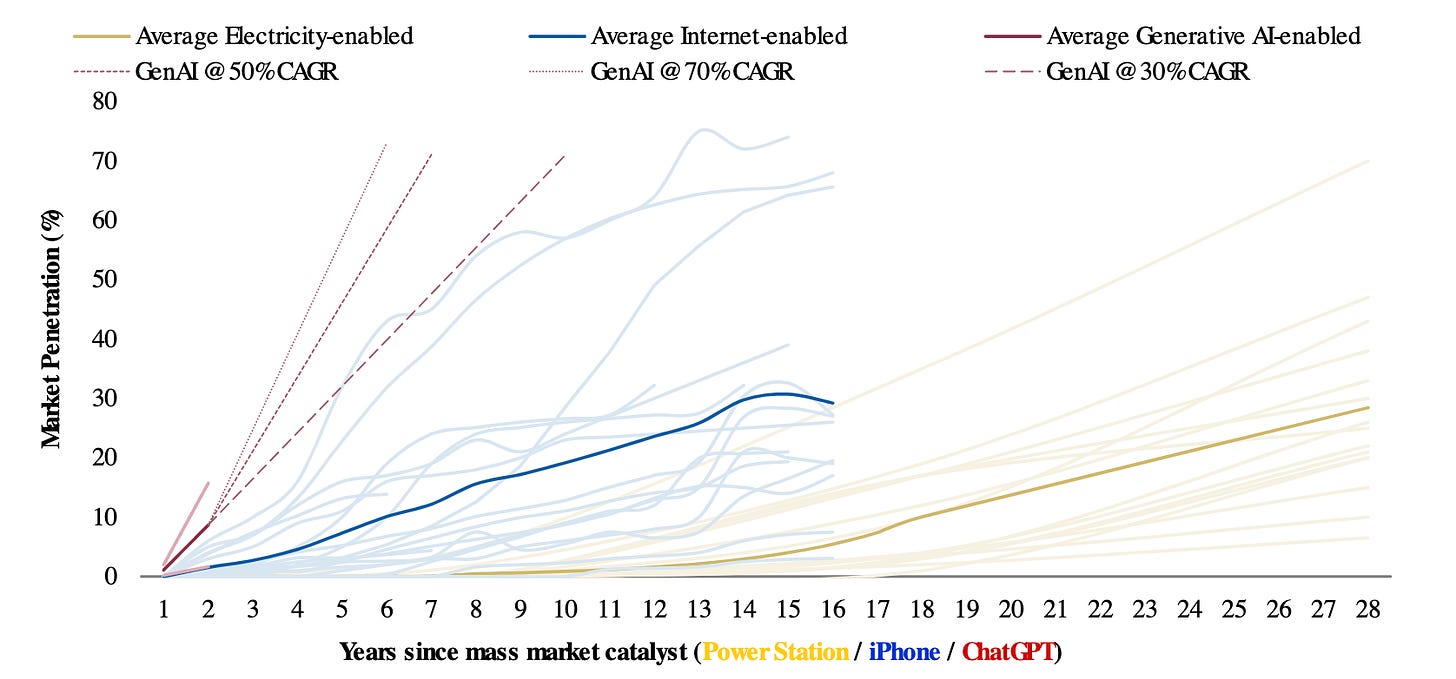

The ‘ChatGPT moment’ dwarfed previous catalytic events that spurred technology diffusion like the adoption of internet services following the iPhone or adjacencies that electricity spawned.

This seismic shift of course followed the ‘unhobbling’ of latent model capabilities by simply building a front-end for GPT-3.5 and some instruction-tuning. It’s on this evidence that some argue that enhancing current frontier base model capabilities alone would deliver significant economic value.

If you literally stopped all AI progress today and you just tried to make valuable products with the existing technologies, I think you could and I’m not suggesting that progress should be stopped, I’m just saying there’s a lot of latent economic value in the existing capabilities of these models, even if there’s no deeper breakthrough.

The hyperscalers will invest hundreds of billions regardless, lest they lose the existential game of LLM poker. The stakes are just too high.

We can therefore be assured of several more breakthroughs in capabilities as compute scales, notwithstanding . The services-to-software rotation will march on. The $600B question of recouping revenue commensurate with the capex spend will be answered, just on a longer timeframe.

Aside from the overarching scaling laws, there are several other consequential trends.

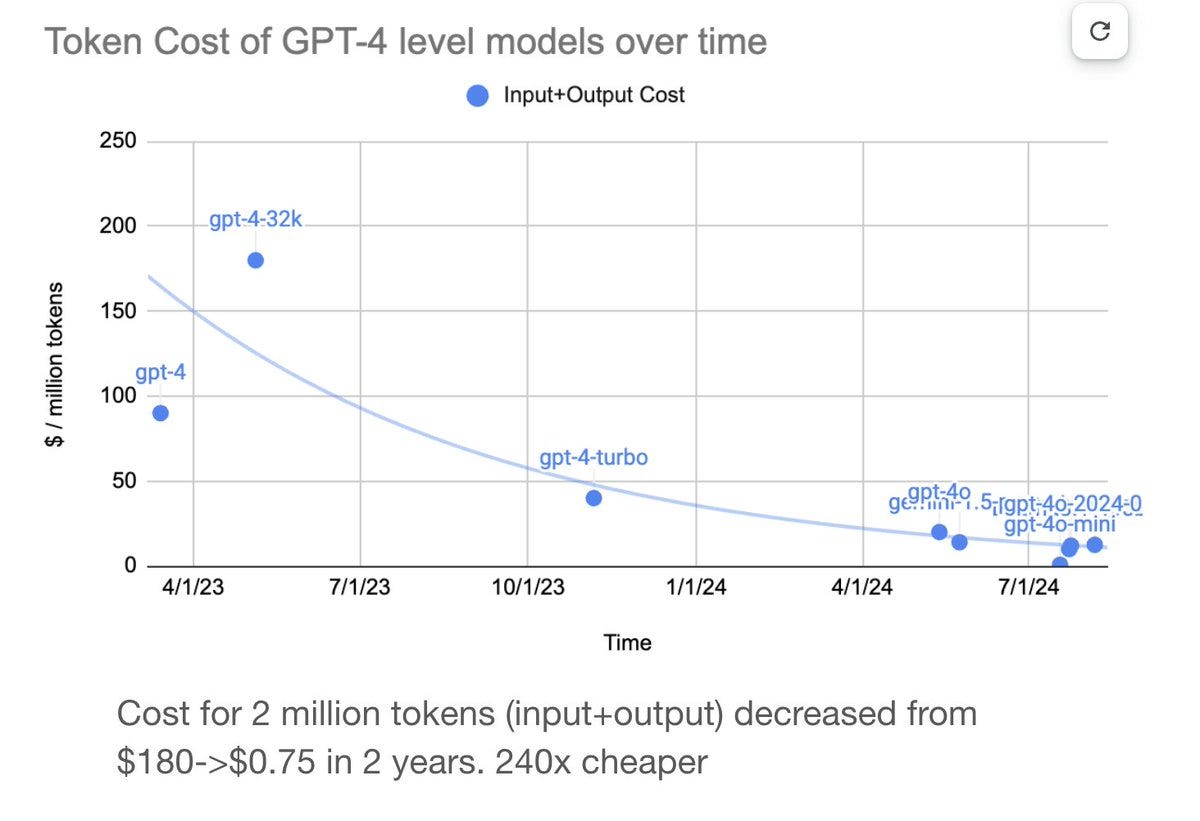

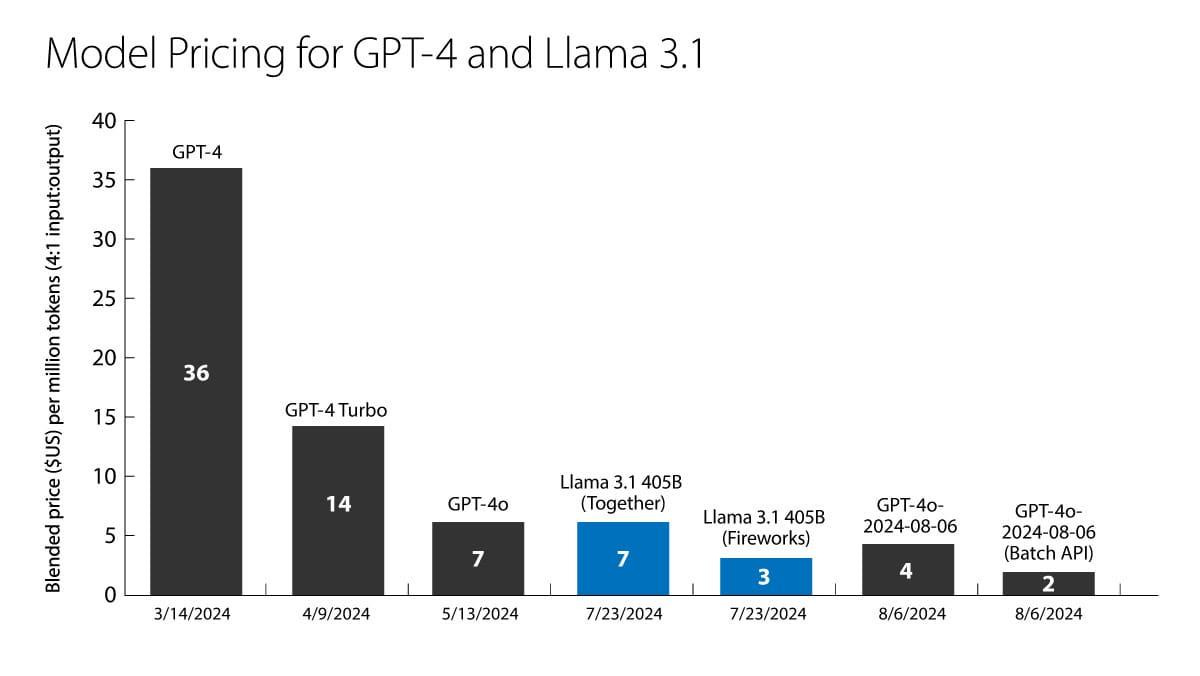

The cost of inference is coming down drastically, spurred by the competitiveness of open source models.

GPT-4o now costs $4 per million tokens (using a blended rate that assumes 80% input and 20% output tokens). GPT-4 cost $36 per million tokens on the same blended basis at its initial release in March 2023. This price reduction over 17 months corresponds to about a 79% drop in price per year.
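For anyone who wants to check the arithmetic, here is a rough Python sketch of how the blended rate and the annualised drop can be derived. The per-token list prices passed in are my assumption of the public pricing behind the $36 and $4 figures (they are not stated above), and the function names are purely illustrative.

```python
def blended_price(input_price, output_price, input_share=0.8):
    """Blended $ per million tokens for a given input/output token mix."""
    return input_share * input_price + (1 - input_share) * output_price


def annualised_change(start_price, end_price, months):
    """Constant yearly rate of change that takes start_price to end_price."""
    return (end_price / start_price) ** (12 / months) - 1


# ASSUMPTION: list prices in $ per million tokens (input, output).
# These are not given in the post; they are chosen because they
# reproduce the $36 and $4 blended figures quoted above.
gpt4_launch = blended_price(30.0, 60.0)
gpt4o_now = blended_price(2.50, 10.0)

# 17 months between the two price points, as stated in the text.
yearly = annualised_change(gpt4_launch, gpt4o_now, months=17)

print(f"Blended price: ${gpt4_launch:.0f} -> ${gpt4o_now:.0f} per million tokens")
print(f"Annualised change: {yearly:.1%}")
```

The key step is the exponent of 12/17: the total 17-month decline is converted into a constant yearly rate rather than divided linearly, which is why a roughly 89% total drop works out to just under 79% per year.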

As we’ve discussed before, and as Gavin Baker highlighted during his excellent appearance on Invest Like The Best last week, the cost of intelligence is falling rapidly. This has several implications for the economics of AI applications that can just as easily be powered by the n-1 class of cheaper models as they can by frontier models, especially as the gulf in inference costs widens.

If you believe in scaling laws, you believe AI will have very high marginal cost. Because of a variety of things that we're going to talk, those marginal costs go down really, really quickly, but they're still really, really high at the leading edge for models

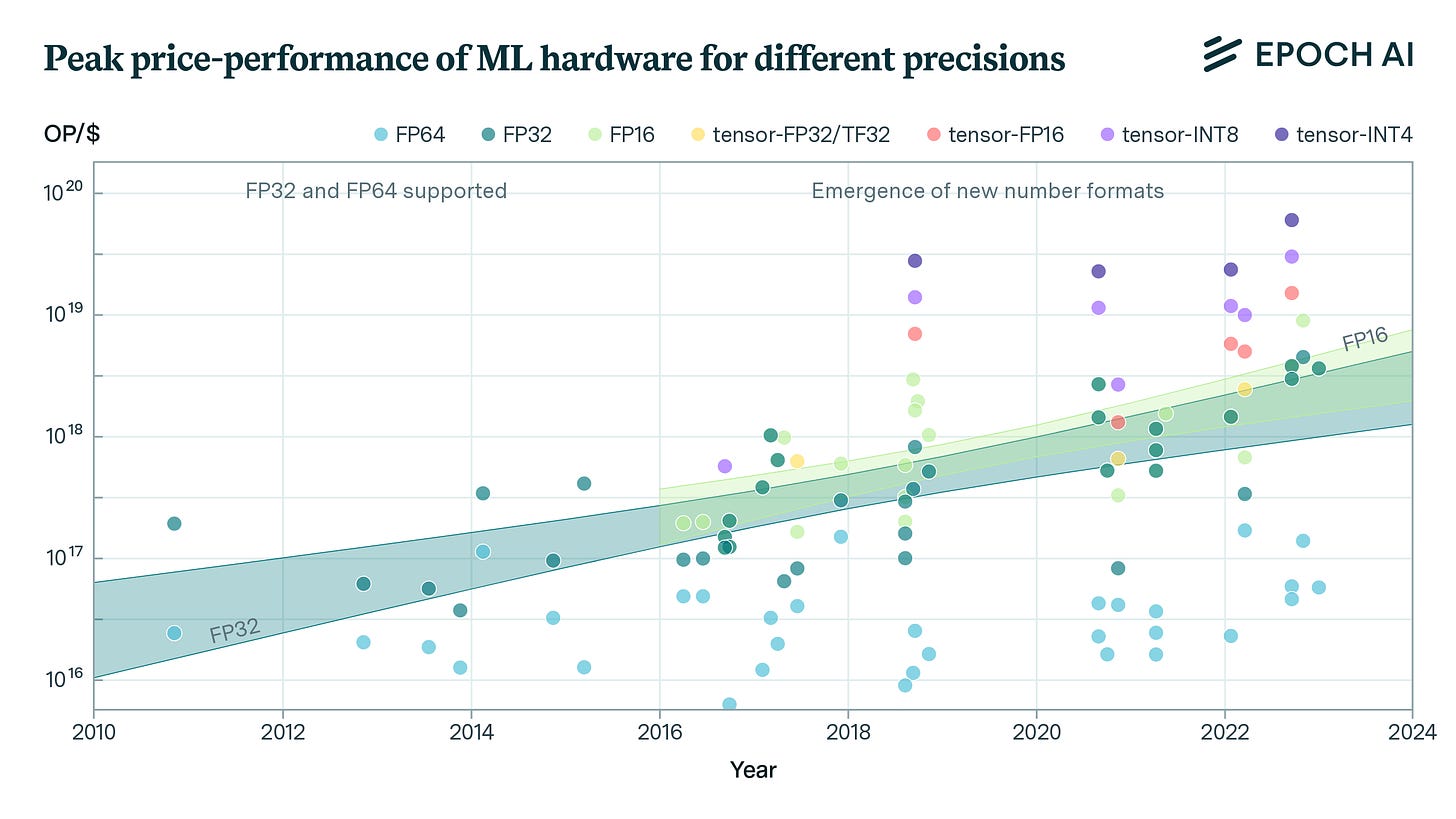

Hardware utilisation is getting better, with lots of room to improve.

The physical compute required to achieve a given level of performance in language models is falling by a factor of roughly 3 each year (visit Epoch for a dashboard of other metrics).
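To get a feel for what a 3x yearly decline compounds to, here is a small Python sketch. It assumes the rate stays constant, which is a simplification of mine rather than a claim from Epoch, and the helper names are made up for illustration.

```python
import math

# From the text: compute needed for fixed performance falls ~3x per year.
# ASSUMPTION: the rate is held constant over the whole horizon.
ANNUAL_FACTOR = 3.0


def relative_compute(years, factor=ANNUAL_FACTOR):
    """Fraction of today's compute needed after `years` at a constant rate."""
    return factor ** (-years)


def halving_time_months(factor=ANNUAL_FACTOR):
    """Months for the compute requirement to halve at a constant rate."""
    return 12 * math.log(2) / math.log(factor)


for years in (1, 2, 3):
    share = relative_compute(years)
    print(f"After {years} year(s): {share:.1%} of today's compute")

print(f"Halving time: {halving_time_months():.1f} months")
```

Framed this way, the requirement halves in well under a year, and after three years the same performance would need only a few percent of today's compute if the trend held.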

Backcasting

Backcasting is the opposite of forecasting.

It works best when you want to create something extraordinarily unique and surprising. Something that’s not incrementally better, but exponentially different and unique.

As you backcast, you are likely to find that many current assumptions about products and markets won’t be true in the future. Your goal is to find insights about the future that are non-consensus and right.

Starting from the future and working backwards is the second key to finding a breakthrough.

Backcasting plausible, possible, and preposterous futures based on the known quantities about AI comes with the challenge of fundamentally rethinking the way we’ve interacted with computers for decades.

Plausible futures are ones where agents interact with databases, or digital workers replace humans for large swathes of OpEx. These are perfectly reasonable outcomes of scaling laws holding.

Possible futures are more radical in their scope, leveraging technologies in groundbreaking ways that only seem obvious after the fact. It’s beyond me to posit a good example, but it’s striking that most applications of AI to date still feel incremental.

Preposterous futures are even harder to bring to life with an example. Endeavours like Sakana’s ambition for LLMs to accelerate scientific research might fuel some of the preposterous futures that are hard to even imagine.

Gathering insights

Mike Maples has an excellent description for those who excel at backcasting:

“Seers” are people who you believe are living in the future around a given inflection. They might be entrepreneurs, researchers, academics, futurists, hobbyists, Angel investors, and the like. These are the people you want to spend time with.

This is what reinforces tech hubs. It’s how AI has breathed new life into San Francisco.

On that front we’ve got a long way to go in Europe. In my discussions with founders and prospective entrepreneurs, I constantly hear frustration with the lack of ‘seer’ density. It’s getting better, but not fast enough. I’ve enjoyed sparring with friends who are looking to build communities to rectify this.

The inflection is clear. The technology will continue advancing, without having to account for unforeseeable breakthroughs.

Backcasting from the future becomes easier the more time you spend with other ‘seers’. You must surround yourself with those living in the future.

Curated Content

The Right Kind of Stubborn by Paul Graham

Counterintuitive advice for building AI products by

print(“Hello AI World”): Evolution of the developer economy in the age of AI by

Your guide to GTM metrics 2.0 by

Quote of the week

‘Everything around you that you call life was made up by people that were no smarter than you. And you can change it. You can influence it. You can build your own things that other people can use. Once you learn that, you’ll never be the same again.’

Steve Jobs

Thank you for reading. If you liked it, share it with your friends, colleagues, and anyone that wants to get smarter on startup strategy. Subscribe below and find me on LinkedIn or Twitter.