Pricing & Packaging In The AI Paradigm

How will the cost of inference be distributed in the value chain?


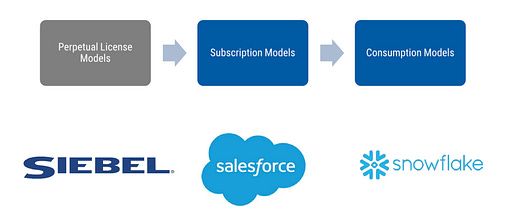

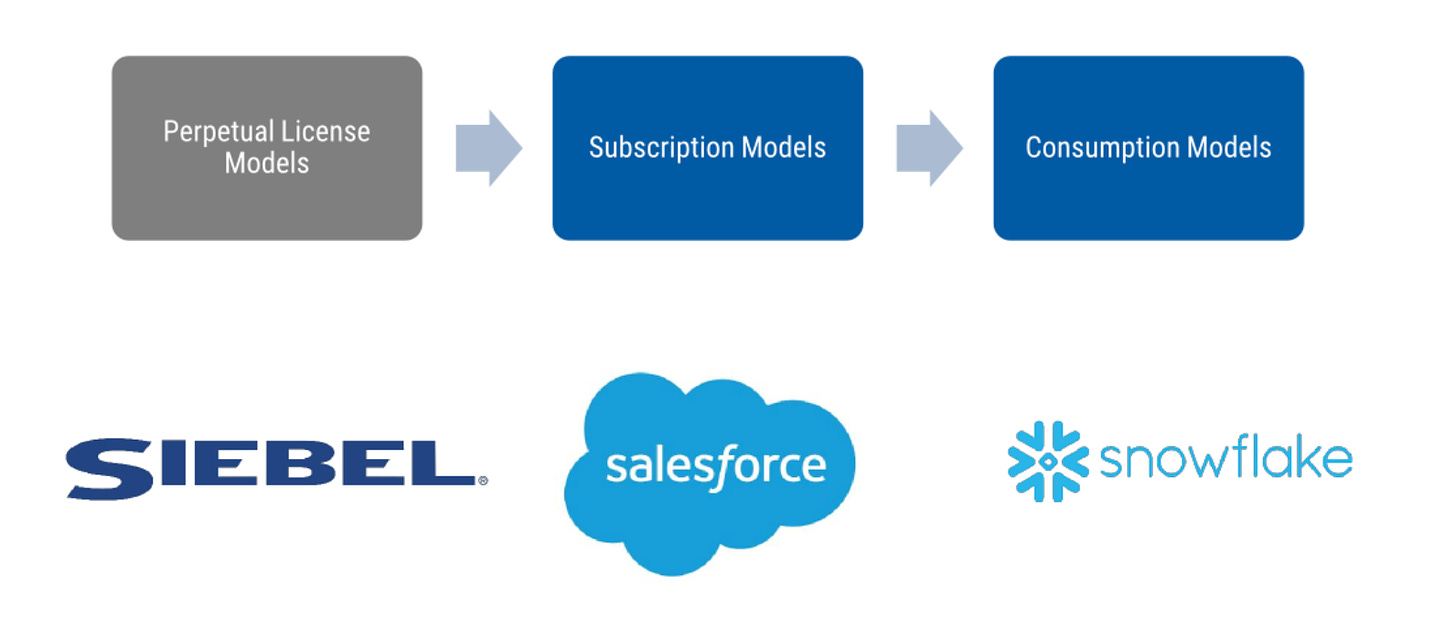

The software business model’s evolution from on-premise licenses to usage-based consumption has loosely followed the trajectory of sales motions becoming more product-led over time.

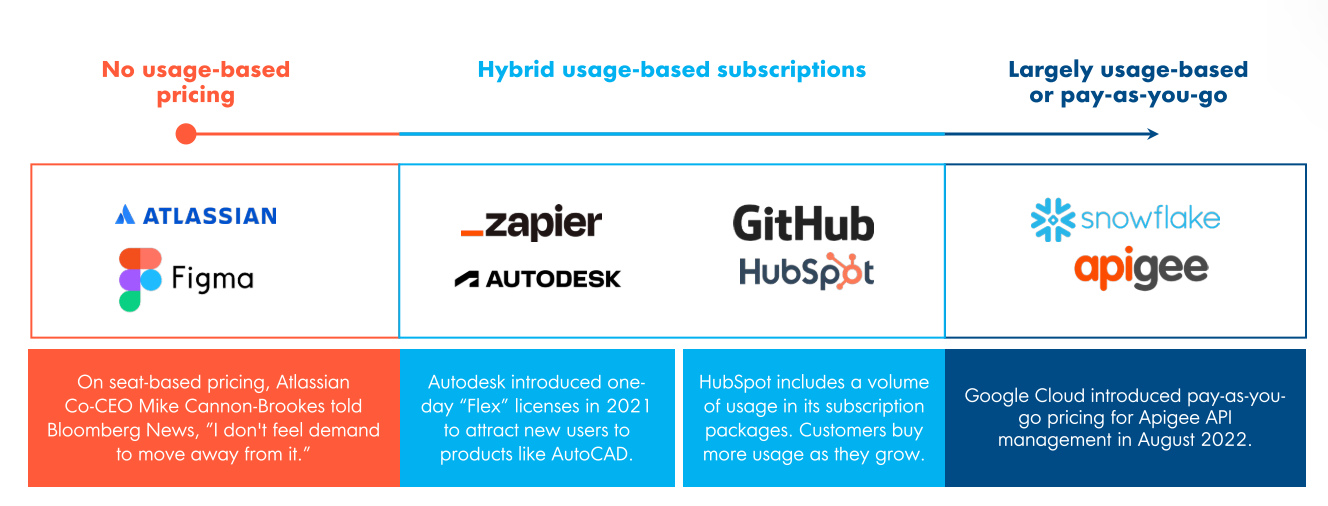

In 2022, 61% of SaaS companies implemented some form of usage-based pricing, running the gamut from pure infrastructure to application software.

Whilst pure usage-based pricing is on the rise, it’s the model that exhibits the most volatility, as sales cycles lengthen relative to the subscription model.

In light of this, the hybrid pricing model combining subscription and tiered usage is the biggest accelerant in SaaS. HubSpot, for example, offers a set volume of usage in its subscription options.

Although the shift to hybrid or pure-usage models may seem inexorable across SaaS, its application will not be uniform across application and infrastructure software. The test of its efficacy is whether the usage of your product can be tightly coupled with value creation (ideally a revenue metric) for your customer:

Does the use of your software line up with a set of metrics that you can actually assign a revenue-oriented metric to? Metrics you can easily assess and monitor in a way that creates value for your customer, and is scalable for your revenue model?

AI-enhanced scale-ups and de novo applications alike will have to grapple with this test as they experiment with pricing and packaging, especially where limited value is created relative to the non-trivial cost of inference.

In two separate interviews for Bessemer and Mario Gabriele, Intercom co-founder and Chief Strategy Officer Des Traynor noted the prohibitive costs of rolling out LLM capabilities indiscriminately across the Intercom product:

A worst case scenario would look like adding expensive generative AI to an extremely high frequency and reasonably low-value feature. With millions of users, this experiment could bankrupt a business without driving real customer value.

There are features we could build that we won’t because they’re too expensive. For example, we could use GPT-4 to summarize every conversation that every customer has with every business on Intercom. We could do that, but it would cost a lot of money because Intercom powers 500 million conversations a month. That’s a lot of API calls, right?
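To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The conversation volume comes from the quote above and the price is the c. $0.002 per 1,000 tokens figure cited later in this piece; the tokens-per-conversation value and the function name are purely illustrative assumptions, not Intercom data.

```python
def monthly_inference_cost(conversations_per_month: int,
                           tokens_per_conversation: int,
                           price_per_1k_tokens: float) -> float:
    """Estimate monthly API spend for processing every conversation once."""
    total_tokens = conversations_per_month * tokens_per_conversation
    return total_tokens / 1000 * price_per_1k_tokens


CONVERSATIONS_PER_MONTH = 500_000_000  # from the Intercom quote
TOKENS_PER_CONVERSATION = 1_000        # assumption: prompt plus summary, illustrative only
PRICE_PER_1K_TOKENS = 0.002            # c. $0.002 per 1,000 tokens, as cited in this piece

cost = monthly_inference_cost(CONVERSATIONS_PER_MONTH,
                              TOKENS_PER_CONVERSATION,
                              PRICE_PER_1K_TOKENS)
print(f"Estimated monthly cost: ${cost:,.0f}")
```

Under these assumptions the bill comes to roughly $1 million a month. The quote refers to GPT-4, which was priced well above this per-token figure, so the real number for that model would likely be considerably higher.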

Earlier this week, Ramp announced Ramp Intelligence, its set of GPT-enabled features built on top of OpenAI’s models that will deliver pricing intelligence, accounting automation, and a Copilot for finance teams. Ramp’s business model continues to rely on interchange as it executes on a TAM expansion strategy of eating into adjacent categories of corporate spend with better margins, such as travel and IT. Interchange has continued to sustain Ramp’s unrivalled product velocity as it executes on this strategy, but will it be sufficient to subsidise the cost of delivering LLM capabilities to its customers?

Arguably, this is where there is a clear demarcation. Relative to Intercom’s summarisation use case, Ramp Intelligence’s purported cost-savings (e.g. saving customers 20% of their time on manual coding of expenses or 17.5% on software contracts) provide a clear business case to start charging for these features.
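One way to frame that demarcation is as a ratio of the value a feature creates per use to the inference cost of that use. The sketch below is a minimal illustration: the per-use dollar values, the token counts, and the threshold of 10 are all hypothetical placeholders rather than figures from Intercom or Ramp, and only the token price is the c. $0.002 per 1,000 tokens cited in this piece.

```python
def value_to_cost_ratio(value_per_use: float,
                        tokens_per_use: int,
                        price_per_1k_tokens: float) -> float:
    """Ratio of customer value created per use to inference cost per use."""
    cost_per_use = tokens_per_use / 1000 * price_per_1k_tokens
    return value_per_use / cost_per_use


def worth_charging_for(ratio: float, threshold: float = 10.0) -> bool:
    """Hypothetical rule of thumb: charge only when value clearly exceeds cost."""
    return ratio >= threshold


PRICE_PER_1K_TOKENS = 0.002  # c. $0.002 per 1,000 tokens, as cited in this piece

# Hypothetical inputs: a high-frequency, low-value summary versus a
# lower-frequency feature that saves meaningful manual effort.
summary_ratio = value_to_cost_ratio(0.001, 1_000, PRICE_PER_1K_TOKENS)
expense_coding_ratio = value_to_cost_ratio(0.50, 1_000, PRICE_PER_1K_TOKENS)

for name, ratio in [("summarisation", summary_ratio),
                    ("expense coding", expense_coding_ratio)]:
    print(f"{name}: ratio {ratio:.1f}, charge for it: {worth_charging_for(ratio)}")
```

With these placeholder inputs the summarisation feature falls well below the threshold while the expense-coding feature clears it comfortably, which is the shape of the argument above rather than a measured result.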

Nearly half of horizontal application software companies have pure usage (16%) or hybrid pricing (32%) models. Given OpenAI’s pricing of c. $0.002 per 1,000 tokens for API calls, the addition of AI capabilities to product suites will pose several pricing questions for application builders.

If the ratio of value creation to cost for a specific feature is below a certain threshold, how should application builders weigh up the unit economics of said feature versus the downstream second-order revenue (expansion, referrals) of the feature working in combination with others?

Will time-based and usage-based trials prevail over freemium models? LLMs’ compression of time-to-value will further boost the already superior conversion rates of trials.

How does one approach feature gating when it comes to AI features, particularly for the enterprise? Perhaps by counter-positioning against vendors of choice for prosumers by layering on security, privacy compliance and observability, much like Box counter-positioned against Dropbox.

They invested in security, compliance, and admin panels, positioning themselves as the “Enterprise Dropbox,” making early inroads into this market.

“Box was first to market with an administration console. And that became like a really important differentiator for us. We eventually started marketing it as a solution to your Dropbox problem.”

I’d love to hear your thoughts on how the cost of inference will be distributed in the value chain among participants, from the foundational model companies all the way down to the end user.

What I’m Reading

Breaking the GTM code: Slack's biggest secrets finally revealed

The great folks at Topofthelyne lifted the curtain on Slack’s scalable infrastructure behind their incredibly successful expansion motion - all the more important when you consider that the top quartile of companies will derive 40% of growth from existing customers.

Xero: Building Your Platform The Right Way

The Tidemark team’s excellent Vertical SaaS Knowledge Project series includes interviews such as this one with Xero, where they touch on how this innocuous business from Australia managed to reach 3 million-plus customers doing 4 trillion-plus transactions a year. Hint: a thriving partner ecosystem.

Who’s Doing Well This Week: Dynatrace, HubSpot, Atlassian, Monday and More

Amidst the doom and gloom of RIFs and vendor consolidation, it’s heartening to see both infrastructure and application software behemoths post such impressive growth rates.

Google I/O and the Coming AI Battles

Ben Thompson masterfully analyses Google’s recent I/O announcements and their implications for AI’s trajectory as a sustaining or disruptive innovation.

The Geopolitics Of AI Chips Will Define The Future Of AI

This is a stirring read from Radical Ventures Partner Rob Toews on the precarious position of TSMC, arguably the most important node in the global AI ecosystem. Once you appreciate the sheer gap between TSMC and its closest competitors, it’s deeply unsettling to ponder the ramifications.