Forward Deployed Engineers: A Means To An End For AI Startups

Capturing Business Logic And Expert Reasoning

Hey friends, I’m Akash!

Software Synthesis analyses the intersection of AI, software and GTM strategy. Join thousands of founders, operators and investors from leading companies for weekly insights.

You can always reach me at akash@earlybird.com to exchange notes!

What’s old is new again: AI-native software companies are applying lessons on distribution from prior eras of enterprise software to a new paradigm in which purchasing is increasingly a top-down decision.

Last year, Ben Thompson mused that the right analogy for AI is how enterprise computing and mainframes replaced humans doing accounting and enterprise resource planning:

Most historically-driven AI analogies usually come from the Internet, and understandably so: that was both an epochal change and also much fresher in our collective memories. My core contention here, however, is that AI truly is a new way of computing, and that means the better analogies are to computing itself. Transformers are the transistor, and mainframes are today’s models. The GUI is, arguably, still TBD.

To the extent that is right, then, the biggest opportunity is in top-down enterprise implementations. The enterprise philosophy is older than the two consumer philosophies I wrote about previously: its motivation is not the user, but the buyer, who wants to increase revenue and cut costs, and will be brutally rational about how to achieve that (including running expected value calculations on agents making mistakes). We went from:

To:

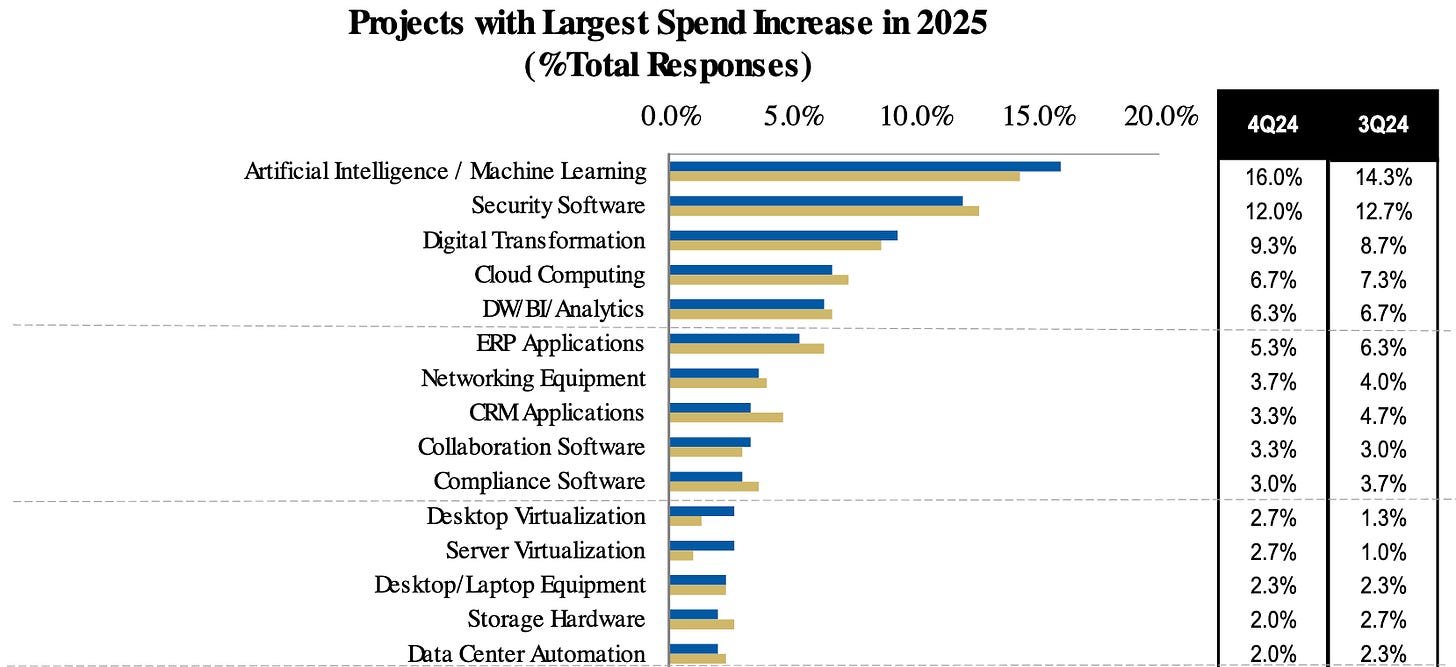

Notice how well this framing applies to the mainframe wave of computing: accounting and ERP software made companies more productive and drove positive business results; the employees that were “augmented” were managers who got far more accurate reports much more quickly, while the employees who used to do that work were replaced. Critically, the decision about whether or not to make this change did not depend on rank-and-file employees changing how they worked, but on executives deciding to take the plunge.

And executives are certainly prioritising investments in AI/ML.

Ajay Agarwal of BCV has written a similarly excellent set of reflections on how AI-native startups can emulate the enterprise sales playbooks of the past:

Every Fortune 500 company CEO has an AI council that is tasked with bringing in new technologies. These mainstream companies want a trusted advisor that can help guide them on the best way to adopt AI – this includes not just the technology but the process for adoption, change management and continuous learning.

PLG flourished in a zero-interest-rate environment that coincided with the consumerisation of enterprise software, as employees sought the same consumer-grade experiences in business applications that they had in their daily lives.

AI’s scope and its potential impact on both the top and bottom line necessitate a top-down sale to executives who are scrambling to ensure their companies are ‘AI winners’.

The forward deployed model of top-down selling is upending the SaaS GTM playbooks that were written in the 2010s for CRUD apps with zero marginal costs. For agents to work in production, they depend on the last mile of business logic and expert reasoning tokens, which can only be captured with a services-first approach.

Forward Deployed As A Means To An End

Canonical startup advice like Paul Graham’s ‘Do things that don’t scale’ is generally aimed at pre-PMF companies. First Round Capital, for example, encourages founders at the ‘nascent’ stage of product-market fit to invest in however much service it takes to get customers to value:

“What worked best was part product, part service. We used the demo as a chance to build a proof of concept, so we didn’t have a dummy sales pitch version — we always asked the prospect for an actual dataset to play with.

Then it was a very quick forward-deploy. We would come in and do a free trial where we would set up the software and teach them how to use it. And then we would watch for engagement,” he says. “Only when there was engagement would we close the deal. We had almost no early churn because we only sold customers who got the value out of it.”

Lloyd Tabb, Looker Founder and CTO

Therefore, we created a team of data analysts to serve as our technical customer-facing teams (sales engineers, customer support, and implementation analysts) to quickly deploy and develop instances of Looker for our customers.

Keenan Rice, founding team

As we’ve previously discussed, trust and value are intrinsically linked in the age of AI, which is why implementation matters so much more in this new paradigm, and not just at the pre-PMF stage.

In the age of AI, implementation matters – not just upfront, but over time. Whether these professionals are called PS (professional services) or FDEs (forward-deployed engineers), the function is the same: help customers with ingesting data, tuning the models and iterating on the efficacy of the solution.

Ajay Agarwal

OpenAI’s hiring of FDEs is a testament to the fact that AI is a general-purpose technology and that, as with Palantir’s FDE model, getting customers to value depends as much on problem discovery as on the technology itself.

Sometimes, Palantir is an anti-fraud tool; sometimes, it's a compliance tool; sometimes, it's for managing supply chains to increase production and control emissions.

That's a broad set of use-cases, but products with similarly broad end markets also think this way.

OpenAI is hiring forward-deployed engineers for roughly the same reason—if OpenAI is a company that sells token predictions, executives don't know what to do with it; if it's a company that answers customer support questions, moderates a forum, helps write code, summarizes emails, etc., it's a company whose use cases are too broad to connect to any specific customer.

Byrne Hobart

The delays in getting AI into production in the enterprise are less a technological problem and more an organisational one. Ben Thompson’s analogy of how corporates failed to realise the value of Meta’s precision advertising is apt:

What will become clear once AI ammunition becomes available is just how unsuited most companies are for high precision agents, just as P&G was unsuited for highly-targeted advertising. No matter how well-documented a company’s processes might be, it will become clear that there are massive gaps that were filled through experience and tacit knowledge by the human ammunition.

That’s ultimately what AI-native companies are attempting to do: collect tacit knowledge, transform it into reasoning tokens, and then deliver outcomes via several vectors of model customisation, such as fine-tuning, RAG and prompt engineering. In the case of AI agents, the outcomes are economically valuable tasks.

FDEs at AI-native companies are effectively frontline AI engineers.

Tyler Cowen has a wonderful saying, ‘context is that which is scarce’, and you could say it’s the foundational insight of this model. Going onsite to your customers – the startup guru Steve Blank calls this “getting out of the building” – means you capture the tacit knowledge of how they work, not just the flattened ‘list of requirements’ model that enterprise software typically relies on.

Nabeel Qureshi

The notion that business applications exist, that’s probably where they’ll all collapse, right, in the agent era, because if you think about it, they are essentially CRUD databases with a bunch of business logic.

The business logic is all going to these agents, and these agents are going to be multi-repo CRUD, right? So they’re not going to discriminate between what the back end is; they’re going to update multiple databases, and all the logic will be in the AI tier, so to speak. And once the AI tier becomes the place where all the logic is, then people will start replacing the back ends.

Satya Nadella

If foundation models are the first mile, the last mile of business logic and expert reasoning tokens will be prime real estate for startups.

There are several ways I’m seeing companies approach this.

Sierra has ‘agent engineers’ who are responsible for systems integration, agent supervision and agent extensibility. They work closely with technical counterparts to scope and understand domain-specific details comprehensively, so that they can ship production-grade agents.

Other approaches include:

Onboarding teams being on-site and documenting reasoning processes

In-house labelling efforts, hiring domain practitioners

Using models to quiz experts on various permutations, extracting as much tacit knowledge as possible instead of relying purely on observations

These are all part of the post-sales implementation process and are prerequisites for the shiny demo to work in production and earn more trust from buyers. Post-deployment, RL engineering will be equally important for amassing a defensible dataset of reasoning and feedback tokens.

A baseline of domain knowledge will be key for early hires at AI startups to successfully capture expert reasoning tokens. This isn’t an easy role to hire for: the combination of domain knowledge (to varying degrees), AI engineering and customer-facing experience is hard to find, but it will matter more to AI-native startups than it did to the SaaS companies that came before.

Once this sequence is internalised, the case for more services-heavy businesses to productise and earn software-like margins becomes clearer. An FDE approach may be deployed in each vertical a company pursues, followed by productisation once enough reasoning and business logic tokens have been collected from N customers. If Palantir can attain best-in-class software margins, why wouldn’t AI-native startups be able to do the same, especially if the quality of the outcomes depends heavily on the inputs derived from the forward deployed approach?
