First Principles Of Product In The AI Age

Escaping skeuomorphism and building new cars instead of faster horses

Join over 2,200 founders, operators and investors for Missives on software strategy by subscribing here.

Skeuomorphism was a term often used to describe the disappointing incrementalism of many crypto applications; truly realising the technology's disruptive potential required fundamentally rethinking products and experiences for consumers.

ChatGPT defines skeuomorphism as:

Skeuomorphism is a design approach in which objects or interfaces are made to resemble their real-world counterparts or analogues, even though such resemblances may not be strictly necessary or functional in a digital environment.

ChatGPT was certainly a catalyst for mainstream awareness of AI, but the flowering of applications and developer tooling has been underway for some time, even if it feels like the match was only lit once OpenAI fine-tuned a GPT-3.5 series model into a conversational chatbot.

Among the most discussed topics is the question of value capture. Unlike previous platform shifts (PC, mobile, cloud), the early innings of the AI revolution foreshadow an oligopolistic market structure, with incumbent tech giants placing bets at various layers of the stack.

Growth-stage scale-ups (e.g. Notion, Airtable, Navan, Databricks) are following suit, armouring their products with AI enhancements to fend off point-solution invaders.

This all raises the question of how new applications can mount a sustained charge on market share and climb moat trajectories. Most arguments boil down to demand-side economies of scale and verticalisation: custom, domain-specific models trained on proprietary or vertical-specific data outperform larger general-purpose models, and further entrench this advantage through reinforcement learning from human feedback (RLHF).

This is certainly the conventional path that many companies will go down, but it’s important to zoom out and acknowledge the technology’s ability to redefine existing paradigms.

Howard Lerman, CEO of Roam and previously CEO of Yext, recently told Sam Blond about his approach to customer interviews:

When you test your product out with people, listen to their problems. Learn everything you can from them. When you deeply understand their pain, you can then come up with solutions they might not be able to see.

Howard refrained from asking customers what solutions they were after and instead focused solely on the problems they had. Customers naturally skew towards skeuomorphism; it's the entrepreneur's mission and responsibility to devise novel solutions that are radically better than the status quo.

If Henry Ford had listened to his customers, he would have spent his time trying to build a faster horse instead of building his first automobile.

The Henry Ford analogy is arguably a fitting one for where we find ourselves in the AI cycle, with existing digital utilities (e.g. email, project management, forecasting) simply retrofitting LLM capabilities into their products.

Pratyush Buddiga of Susa Ventures posed precisely this question about the current crop of AI applications:

I’ll end with a provocative question a friend posed to me: are current implementations like ChatGPT and Sydney just a faster horse instead of a brand-new car?

Skeuomorphism is certainly a fair critique of incumbents, but to some extent a large proportion of the generative AI-native companies that have emerged in recent months are also guilty of linear product design.

New application companies building with orchestration capabilities and plug-ins for multimodal models will eclipse the narrow copilot and chatbot use cases that currently look like nails to the proverbial AI hammer. Entrepreneurs will have to reason from first principles about which form factor addresses customer problems and capitalises on the technology's latent potential.

Fidji Simo, CEO of Instacart, recently described to Patrick O'Shaughnessy how pervasive this mode of thinking was during her time on Facebook's mobile team:

And there was a clear contrast between the companies that were embracing the shift to mobile by taking their web experience and desperately trying to shrink them on a small screen versus the companies that were really using all of the tools that a mobile device had from location awareness to ability to take pictures and all of that to create a really unique mobile-first experience.

The rate at which application software incumbents like Intercom and Atlassian have released AI features is in keeping with the incumbent value capture narrative, but a valid counterargument is that the product scope AI can permeate at these companies is much narrower than the scope available to founders redesigning consumer experiences from scratch. In more explicit cases, such as search, widening the technology's scope cannibalises the existing business model; but even in less explicit cases, divorcing from existing UIs or workflows would clearly be too destabilising to countenance.

Would you rebuild the same? Or would you rebuild it in a completely different way? Would you have conversational experience instead of having a search box?

And I think we're going to see the exact same thing with AI. I would encourage companies to think from first principles: with this new tool set, would you rebuild your products in a completely different way?

Long-time readers will be familiar with my penchant for Clayton Christensen's Jobs-to-be-Done (JTBD) framework: customers hire software to do a job, however much we like to navel-gaze about applications. The framework holds as true for AI as it did for preceding software epochs:

Because if you think about commerce fundamentally, it is a pretty weird experience online because you already need to know exactly which products you want, go into a search box, type that product, select that product, add it to a cart. It is not how people think about their lives. They've gotten used to it because that's all we could do with the tools that we had.

Fidji goes on to describe how consumers naturally think about the job to be done:

But fundamentally when people think about feeding their families, they think, okay, I have a budget of $200 for 10 meals. I have a family of five. One of them has food allergies. We all like Mexican food. What can I make this week with all of these constraints? That's the way you think about managing your family.

She may have inadvertently described a potential objective for autonomous agents, one of the nascent applications eliciting euphoria among developers.

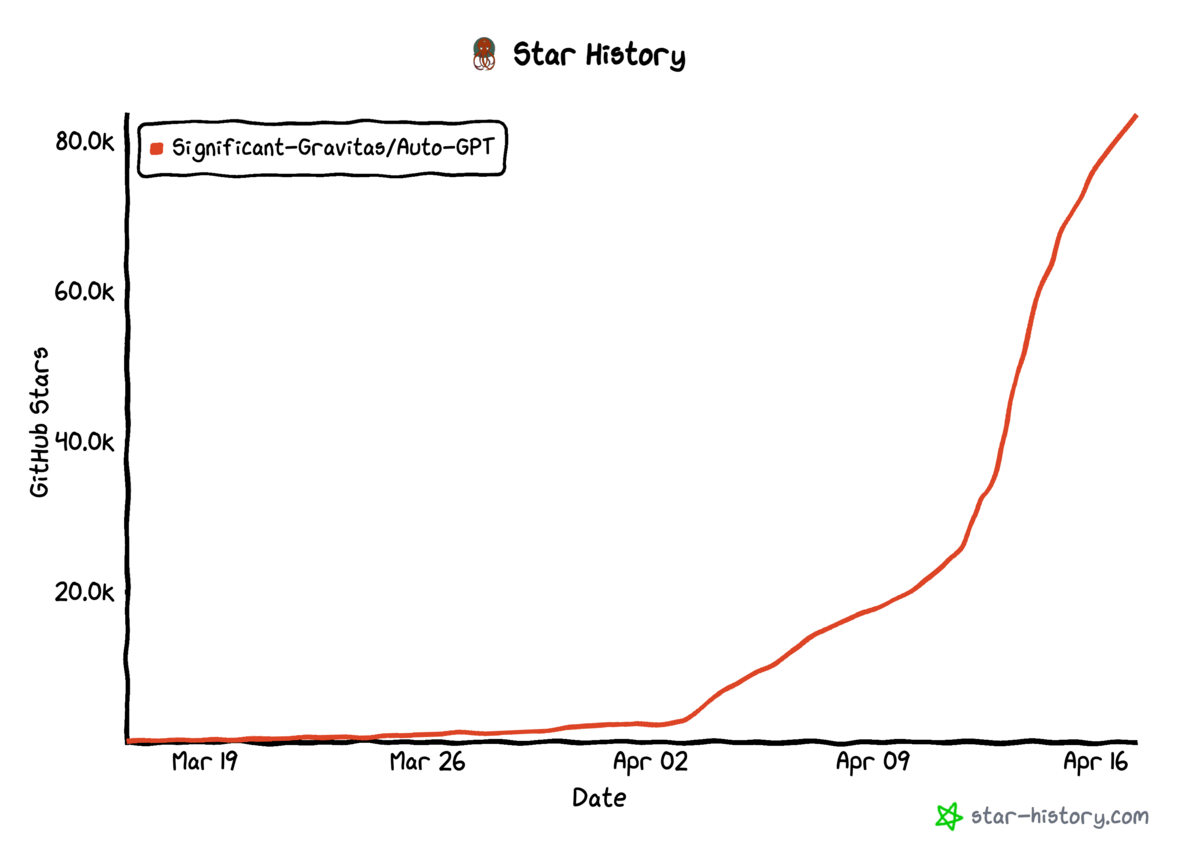

AutoGPT is one of the first of many autonomous agent frameworks to come, and at the time of writing it's one of the fastest-growing codebases on GitHub, far outpacing other open-source AI projects.

Shawn Wang drew a compelling analogy between autonomous agents and self-driving cars, noting the gradual but virtually certain progress ahead of us:

It's time to understand that self-driving AI agents in 2023 are just about where self-driving cars were in ~2015. We are beginning to have some intelligence in the things we use, like Copilot and Gmail autocompletes, but it's very lightweight and our metaphorical hands are always at ten and two.

In the next decade, we'll want to hand over some steering, then monitoring, then fallback to AI, and that will probably map our progress with autonomous AI agents as well.

In the following decade, we’ll develop enough trust in our agents that we go from a many-humans-per-AI paradigm down to one-human-per-AI and on to many-AIs-per-human, following an accelerated version of the industrialization of computing from the 1960s to the 2010s since it is easier to iterate on and manipulate bits over atoms.

For entrepreneurs, this is a window to escape the throes of incrementalism and reshape markets in profound ways.

What I’m Reading

Pratyush Buddiga of Susa Ventures making a case for Vertical Software

Pratyush’s self-admitted evolution from software sceptic and hard tech advocate to champion for SMB vertical software is a well-written argument for how software for the average small business can deliver compounding excellence.

Airplane CEO Ravi Parikh defending OpenAI’s moat

The leaked Google memo triggered a frenzy of discourse on the destiny of closed-source versus open-source models and whether performance gaps will persist indefinitely. There's lots of nuance here, and Ravi makes a compelling case for why foundation model companies, OpenAI in particular, have already set out on considerable moat trajectories, not least around the last-mile problem.

Foundation Models are going multimodal from Twelve Labs

Twelve Labs chronicle developments across different modalities and model architectures, but what struck me in particular is the section discussing Chinchilla-optimal model sizes (roughly 20 training tokens per parameter for a given compute budget). This is very topical at the moment, with attention shifting towards smaller models trained on bespoke datasets and differing opinions on whether Chinchilla-optimal is optimal at all.

Sarah poses valid questions around the sudden emergence of the LLMOps software category and what separates it from a transient market. Ultimately, the teams that are closest to customers and have a pulse for their problems will be best placed to adapt as the floor shifts beneath their feet.