
The AI summer is reviving discussions about moats in software.

Tomasz Tunguz recently spoke to Jason Lemkin about moats for Generative AI companies, identifying better models, usage flywheels, and private data as the most plausible vectors of defensibility. Elad Gil published a comprehensive list of moats whilst Kyle Harrison highlighted the importance of product velocity and moat trajectories, affirming that moats develop over time.

These debates will persist for as long as AI represents a trillion-dollar platform shift (a very long time), but one type of moat that protected Snowflake's superiority in data warehouses may be just as applicable to AI infrastructure.

“Snowflake didn’t have 1000 coders or 1000 generalists. It had probably 5 of the world’s 20 database experts build it from scratch. That’s what makes Snowflake unique”. For any one company to steal that level of talent would be extremely challenging. (Nandu Anilal)

Databases take time to build, as Redpoint General Partner Satish Dharmaraj explained to Logan Bartlett on his show last week. Redpoint paid a premium price for Snowflake’s seed round, fully aware that Redshift was one of AWS's fastest-growing products:

“We just fell in love with the founders. These three French engineers were the best. World class.”

Back in 2013, it was a bold decision to go all in on a cloud data warehouse in the face of overwhelming evidence that on-premise was here to stay. Redpoint invested pre-product in co-founders Benoit Dageville, Thierry Cruanes, and Marcin Zukowski.

The decision boiled down to the team.

Nandu Anilal of Canaan described cornered resources as a moat in which a software business enjoys preferential access to a valuable and scarce resource, in this case talent. Snowflake's data warehouse was one such instance: preferential access to the very best database engineers starved competitors of the talent required to match the product's velocity and quality.

Snowflake was incubated by Mike Speiser at Sutter Hill Ventures; Benoit, Thierry and Marcin were coming out of Oracle at the time, driven by ‘their frustration with the internal dynamics they confront at legacy incumbents’ (h/t Kevin Kwok). Kevin Kwok’s fantastic essay on Mike Speiser's incubation philosophy at Sutter Hill analyses the archetype of founder Mike seeks out, exemplified by Snowflake’s founders:

“Snowflake’s founders are cut from a different cloth. As Benoit Dageville put it ‘We never thought of it as building a company. We just wanted to build a cloud product. The company was an afterthought.’ Yet, their product and technical decisions have been prescient in threading the narrow path to taking on Amazon and Google in the most important core markets of cloud computing.”

Mike Speiser has repeatedly capitalised on this talent arbitrage at Sutter Hill to build enduring companies at the vanguard of secular shifts.

In the foundation model layer of AI, cornering similarly exceptional talent may end up presenting equally formidable moats.

Microsoft Research vice president Peter Lee acknowledged the spiralling cost of hiring AI researchers:

“The cost of a top AI researcher had eclipsed the cost of a top quarterback prospect in the NFL.”

OpenAI's pitch to talent was “the chance to explore research aimed solely at the future instead of products and quarterly earnings”, helping it hire esteemed researchers from Nvidia, Google and Meta whose mission-oriented ambitions resembled those of founders in the Sutter Hill mould.

OpenAI's talent density saw it leapfrog competing AGI research labs, but as capital has flooded into AI this scarce resource has become the subject of intense battles: Anthropic and Adept were both founded by OpenAI alumni. DeepMind, Google's biggest asset in the AGI race, has reportedly lost Igor Babuschkin to Elon Musk's rumoured new OpenAI competitor. Our portfolio company, Aleph Alpha, recruited AI veterans and serial entrepreneurs to build a European challenger to OpenAI.

Listing the traits of research excellence in AI is far beyond the scope of this post, but it's increasingly evident that cornering and retaining the very best research talent will be decisive in determining where market share accrues.

Interesting Reads

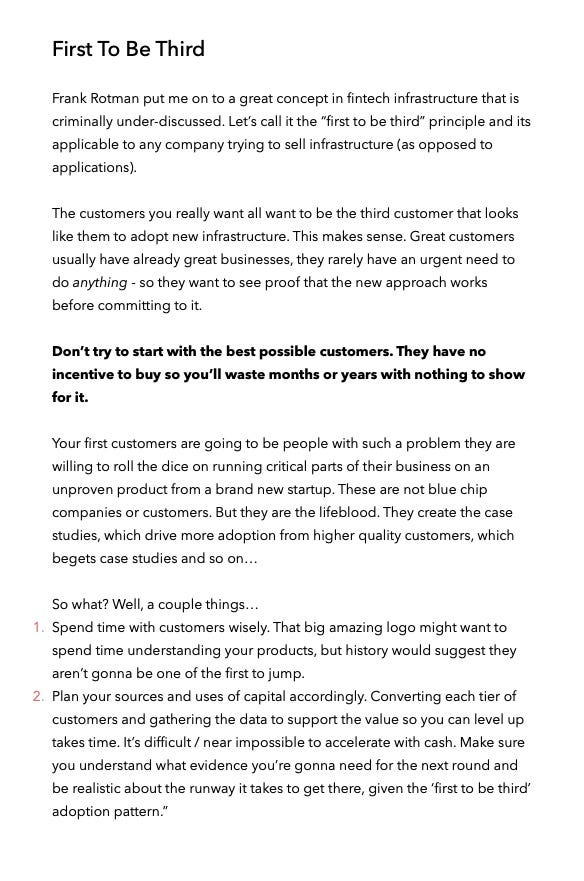

Julian Rowe, Partner at LocalGlobe and Latitude, makes a compelling case for why fintech businesses’ scalable infrastructure and cost structures will reap rewards in the long run.

Jerry Neumann’s first-principles writing on software moats is exceptionally good, and this taxonomy is one that will stick.

The Complete Beginners Guide To Autonomous Agents

Registering the speed at which autonomous AI agents are developing is both tantalising and frightening.

Why Every Startup Needs An AI Strategy

Tomasz Tunguz points out one of the more profound changes under way - the irreversible change in consumer expectations of software, with respect to AI’s capabilities.

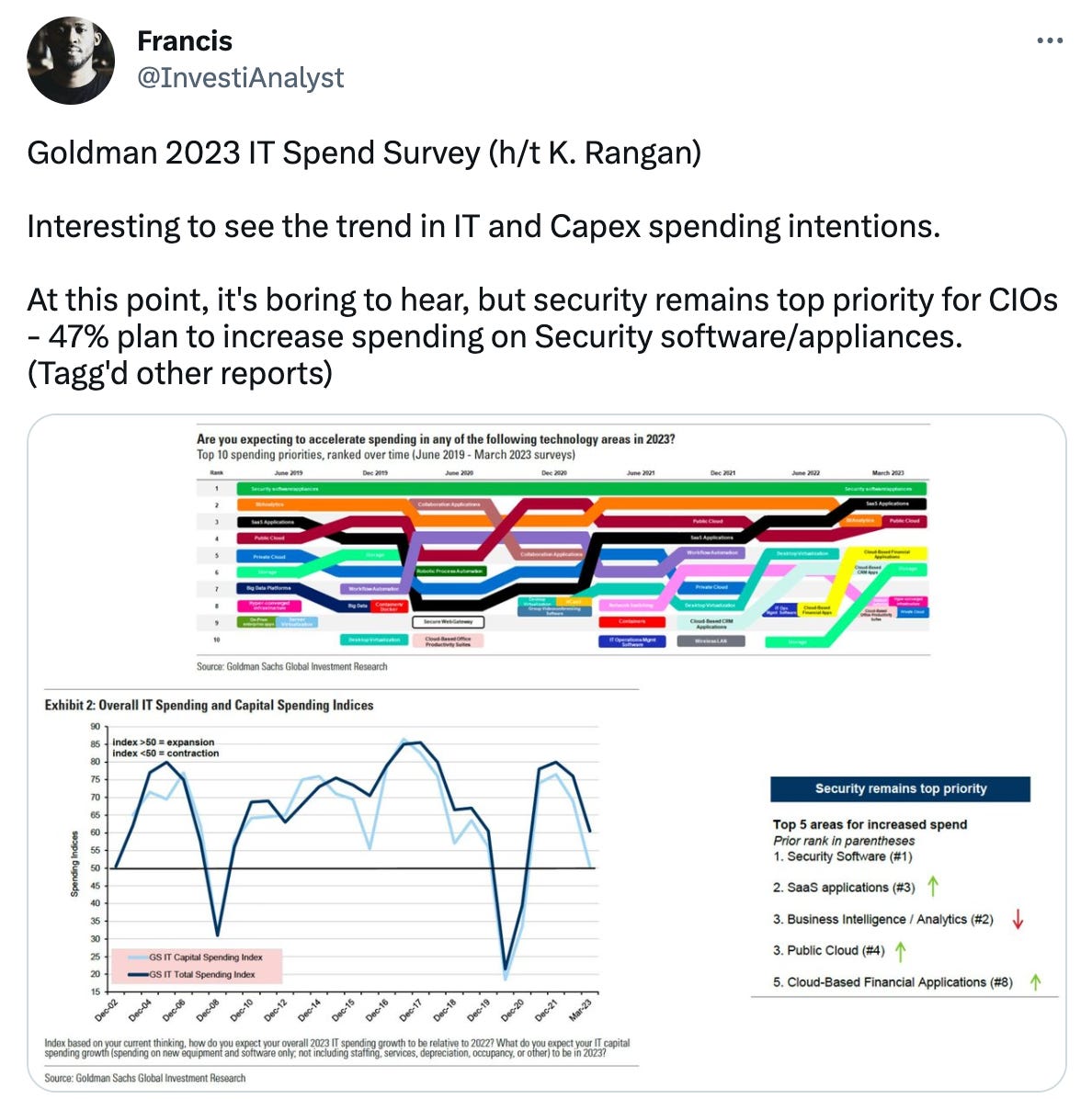
